Ex-User (9086)

MC G is here with da truth for y'all.

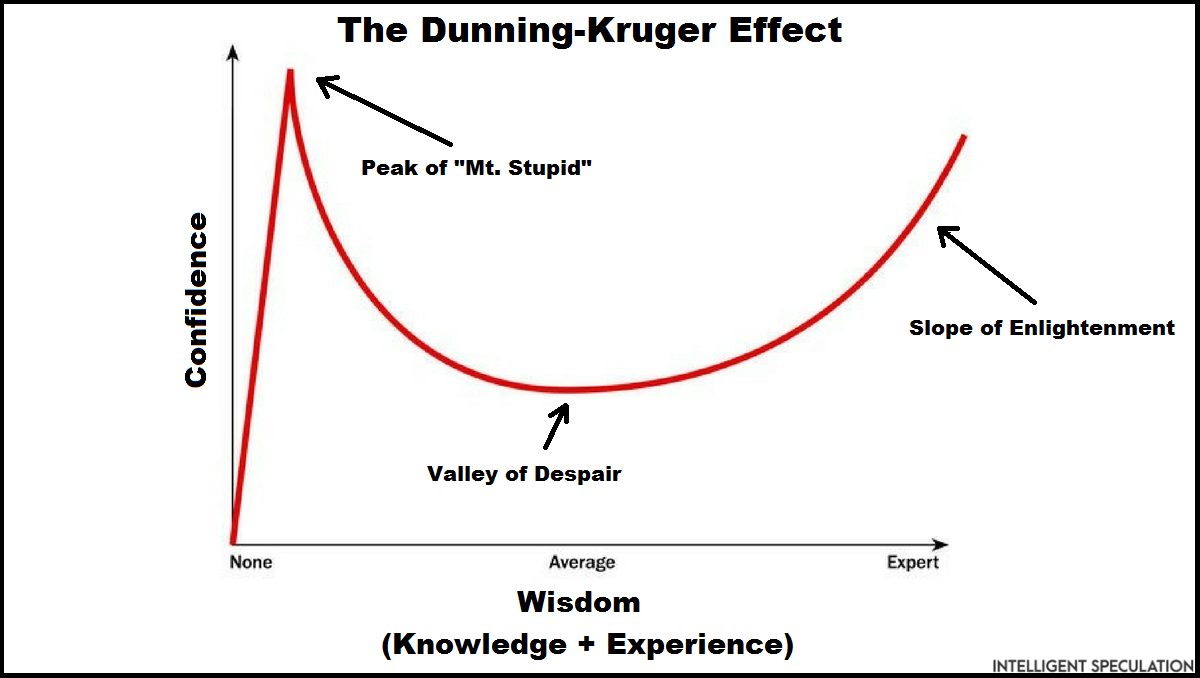

The original Dunning–Kruger graph is wrong and misleading.

It's possible to reproduce the Dunning-Kruger effect without gathering any data at all: just use random noise as the test results and assume everyone has the same confidence. Here's an article explaining why the effect is autocorrelation between mutually dependent variables:

The Dunning-Kruger Effect is Autocorrelation – Economics from the Top Down (economicsfromthetopdown.com)

The graph plots test score by quartile on the x axis and score as a percentile on the y axis. Self-assessed score is plotted against the same x, and everyone rates themselves as better than average.
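
A minimal Python sketch of that claim, under stated assumptions: the test results are pure random noise, and every person reports the same self-assessed percentile (an arbitrary 60). The function name and parameters are hypothetical, made up for illustration. Note that the "actual" line comes out the same whatever the scores are, because sorting people into quartiles and then averaging their own percentiles is fixed by construction:

```python
import random


def simulate_equal_confidence(n=10_000, confidence=60.0, seed=0):
    """Build a Dunning-Kruger-style table from pure noise (hypothetical helper).

    Test scores are random numbers, and every person reports the same
    self-assessed percentile (`confidence`), so nobody is more or less
    confident than anybody else.
    """
    rng = random.Random(seed)
    scores = [rng.random() for _ in range(n)]  # random "test results"

    # Convert raw scores to percentile ranks on a 0-100 scale.
    order = sorted(range(n), key=lambda i: scores[i])
    percentile = [0.0] * n
    for rank, i in enumerate(order):
        percentile[i] = 100.0 * rank / (n - 1)

    # Quartile of actual performance: 0 = bottom, 3 = top.
    quartile = [min(int(p // 25), 3) for p in percentile]

    rows = []
    for q in range(4):
        members = [percentile[i] for i in range(n) if quartile[i] == q]
        actual = sum(members) / len(members)
        rows.append((q + 1, actual, confidence))  # (quartile, actual, perceived)
    return rows


for q, actual, perceived in simulate_equal_confidence():
    print(f"quartile {q}: actual {actual:5.1f}  perceived {perceived:5.1f}  "
          f"gap {perceived - actual:+5.1f}")
```

The bottom quartile comes out about 47.5 points "overconfident" and the top quartile about 27.5 points "underconfident", even though everyone's confidence is identical.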

STOP and think for a second about what they are doing. Let's call the test results R. On the x axis they plot R as a quartile, on the y axis they plot R as a percentile, and they look at self-assessment as Rs (R as self-assessed), judging confidence by the difference Rs - R. That's one variable plotted everywhere, and it should ring alarm bells in your heads.
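
A quick worked version of that alarm bell, assuming (a simplification, not something taken from the original study) that Rs is statistically independent of R, i.e. self-assessment carries no information about actual results at all. The "overconfidence" measure Rs - R still ends up negatively correlated with R, purely because R appears on both sides:

```latex
\begin{aligned}
\operatorname{Cov}(R_s - R,\, R) &= \operatorname{Cov}(R_s, R) - \operatorname{Var}(R) = -\sigma_R^2 \\
\operatorname{Var}(R_s - R) &= \sigma_{R_s}^2 + \sigma_R^2 \\
\operatorname{Corr}(R_s - R,\, R) &= \frac{-\sigma_R^2}{\sigma_R \sqrt{\sigma_{R_s}^2 + \sigma_R^2}}
  = -\frac{\sigma_R}{\sqrt{\sigma_{R_s}^2 + \sigma_R^2}}
\end{aligned}
```

With equal spreads for Rs and R this comes out to about -0.71. In the extreme case where everyone gives the same Rs (so the spread of Rs is zero), it is exactly -1: the lower your score, the more "overconfident" you look, by construction.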

Just assume that everyone thinks they scored 60%. We would get a flat y = 60% line, marked in red (no data gathered, just an arbitrary 60% plugged in). This alone can be used to incorrectly show that the incompetent are "overconfident" even when everyone has identical confidence. That much is obvious without any research, because by definition low R combined with high Rs counts as "overconfident". On top of that, how underconfident or overconfident each group looks depends only on the difficulty of the arbitrary test. Make the test harder, or make the answers random, and everyone will be shown as overconfident.
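
A tiny sketch of the difficulty point, using raw percent-correct scores and the same arbitrary 60% self-estimate for everyone. The scores are invented illustration values (not data from any study), the "harder test" is modeled crudely as every score dropping by 40 points, and the 25% guessing line assumes 4-option multiple-choice questions:

```python
def mean_gap_by_quartile(scores, self_estimate=60.0):
    """Average (self_estimate - score) within each quartile of raw percent scores.

    Positive values read as "overconfident", negative as "underconfident".
    Everyone reports the same self_estimate, so confidence never varies.
    Assumes len(scores) is a multiple of 4 so the quartiles are equal-sized.
    """
    ranked = sorted(scores)
    n = len(ranked)
    gaps = []
    for q in range(4):
        chunk = ranked[q * n // 4:(q + 1) * n // 4]
        gaps.append(self_estimate - sum(chunk) / len(chunk))
    return gaps


# Hypothetical percent-correct scores for the same 8 people.
easy = [55, 62, 70, 75, 80, 85, 90, 95]
hard = [s - 40 for s in easy]   # same people, test made 40 points harder
guessing = [25.0] * 8           # pure guessing on 4-option questions averages 25%

print(mean_gap_by_quartile(easy))      # [1.5, -12.5, -22.5, -32.5]
print(mean_gap_by_quartile(hard))      # [41.5, 27.5, 17.5, 7.5]
print(mean_gap_by_quartile(guessing))  # [35.0, 35.0, 35.0, 35.0]
```

Same people, same confidence: on the easy test most quartiles look underconfident, on the hard test and under random guessing every quartile looks overconfident.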

In plain English, the graph is saying that there has to be a group of people whose confidence is higher than their competence. But what the graph actually shows is that incompetent people are the least confident and competent people are the most confident.

The "overconfidence" is caused by the high baseline confidence of every human being. Honestly, high baseline confidence is an absolute necessity for doing anything; otherwise people would never attempt to tackle a problem, out of fear of failure or because they expect poor results, and they would never get better. Humans should be grateful for this positive bias.

If there is anything useful to say about the graph, it is this:

1. Everyone has a similar amount of above-average confidence, regardless of competence. This is well explained by the "better-than-average effect" https://en.wikipedia.org/wiki/Illusory_superiority

2. Predicting your results requires competence, and so does scoring high. Competence does have some effect, pushing confidence up even further.

3. It took more than a decade for criticism of this effect to start appearing. A lot of wrong, useless research gets cited and misused by scientists without verification, and this misleading research then circulates among the public and spreads misinformation.
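
Points 1 and 2 together can be sketched as a toy model. The function, the 60 baseline and the 0.3 slope are all hypothetical, chosen only for illustration: everyone starts from the same better-than-average baseline, and competence nudges self-assessment up a little. Perceived score then rises with competence, yet the gap between perceived and actual still shrinks from the bottom quartile to the top, which is exactly the shape that gets read as the Dunning-Kruger effect:

```python
def self_assessed(actual_percentile, baseline=60.0, slope=0.3):
    """Hypothetical model of a self-assessed percentile.

    baseline: better-than-average confidence everyone shares (point 1).
    slope:    a modest extra boost from competence (point 2); slope < 1.
    """
    return baseline + slope * (actual_percentile - 50.0)


# Midpoints of the four quartiles of actual performance.
for actual in (12.5, 37.5, 62.5, 87.5):
    perceived = self_assessed(actual)
    print(f"actual {actual:5.1f}  perceived {perceived:5.2f}  "
          f"gap {perceived - actual:+6.2f}")
```

With these numbers the bottom quartile looks about 36 points overconfident and the top quartile about 16 points underconfident, even though confidence goes up, not down, with competence.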

This thing is made up; it was created in Paint.

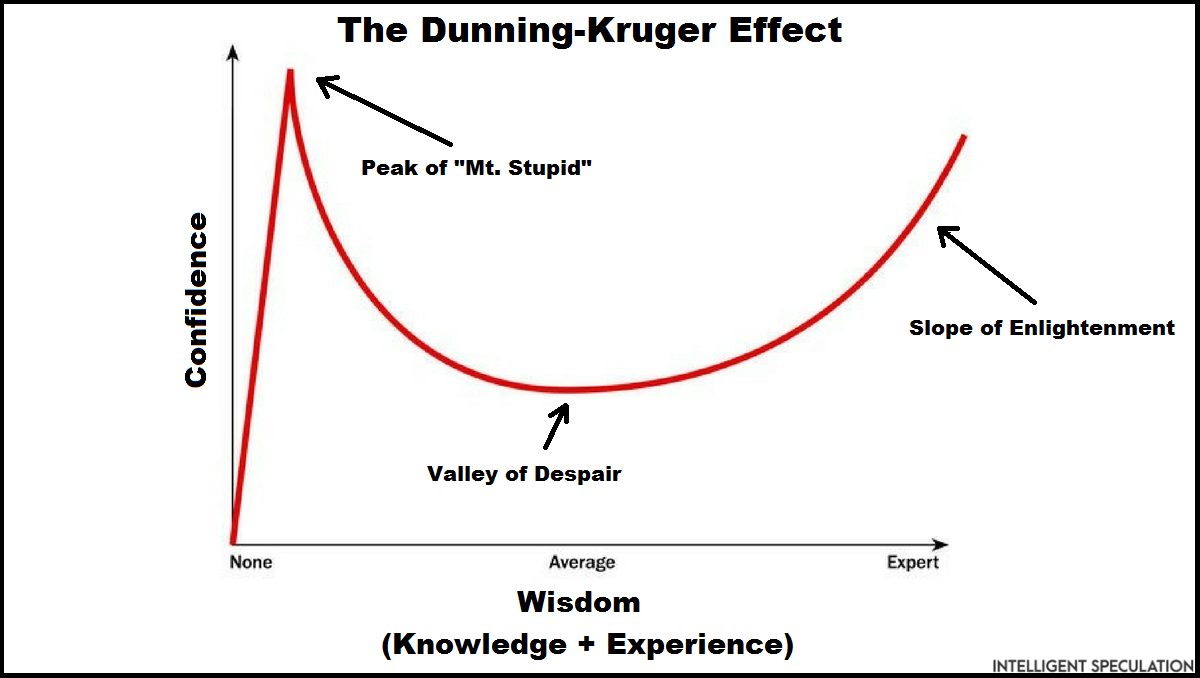
